Prometheus is a robust Kubernetes (K8s) monitoring and alerting tool. With over 35,000 GitHub stars and status as a Cloud Native Computing Foundation graduated project, the open source Prometheus has become one of the most popular components of the Kubernetes monitoring stack.

Because it is so extensible, Prometheus can be complex to deploy and maintain. To reduce that complexity, Prometheus Operator provides Kubernetes-native deployment and management of the components of a Prometheus monitoring stack. Here, we’ll take a look at the basic concepts behind Prometheus Operator and provide a step-by-step walkthrough to help you get started.

Prometheus Operator Benefits

Simply put: the core benefit of Prometheus Operator is simple and scalable deployment of a full Prometheus monitoring stack.

Traditionally, without Prometheus Operator, customizing and configuring Prometheus is complex. With Prometheus Operator, K8s custom resources allow for easy customization of Prometheus, Alertmanager, and other components. Additionally, the Prometheus Operator Helm Chart includes all the dependencies required to stand up a full monitoring stack.

However, the single biggest upside is this: with Prometheus Operator, Prometheus target configuration does NOT require learning a Prometheus-specific configuration language.

Instead, you can generate monitoring target configurations using standard K8s label queries.

Understanding Prometheus Operator Custom Resource Definitions

Prometheus Operator orchestrates Prometheus, Alertmanager and other monitoring resources by acting on a set of Kubernetes Custom Resource Definitions (CRDs). Understanding what each of these CRDs does will allow you to better optimize your monitoring stack. The supported CRDs are:

- Prometheus: Defines the desired state of a Prometheus deployment.

- Alertmanager: Defines the desired state of an Alertmanager deployment.

- ThanosRuler: Defines the desired state of a ThanosRuler deployment.

- ServiceMonitor: Declaratively specifies how groups of Kubernetes services should be monitored. The relevant Prometheus scrape configuration is automatically generated.

- PodMonitor: Declaratively specifies how groups of Kubernetes pods should be monitored. The relevant Prometheus scrape configuration is automatically generated.

- Probe: Declaratively specifies how ingresses or static targets should be monitored. The relevant Prometheus scrape configuration is automatically generated.

- PrometheusRule: Defines a desired set of Prometheus alerting and/or recording rules. The relevant Prometheus rules file is automatically generated.

- AlertmanagerConfig: Declaratively specifies subsections of the Alertmanager configuration, allowing routing of alerts to custom receivers and setting inhibit rules.
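To make one of these resources concrete, here is a minimal sketch of a PodMonitor. The resource name my-app-pods, the env: staging label, the k8s-app: my-app pod label, and the container port name api are illustrative assumptions, not values required by the operator.

```yaml
# Minimal PodMonitor sketch; all names and labels below are assumed for illustration.
apiVersion: monitoring.coreos.com/v1
kind: PodMonitor
metadata:
  name: my-app-pods          # hypothetical name
  labels:
    env: staging             # label a Prometheus resource could select on
spec:
  selector:
    matchLabels:
      k8s-app: my-app        # pods carrying this label are scraped
  podMetricsEndpoints:
  - port: api                # name of the container port that serves metrics
```

A PodMonitor like this selects pods directly, so it can be useful when the workload has no Service in front of it.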

Getting Started With Prometheus Operator

With an understanding of the benefits of Prometheus Operator and what its CRDs do, let’s dive into our step-by-step example. Here, we’ll walk through installing Prometheus Operator and configuring it to monitor a service in a cluster with the label k8s-app: my-app.

Step 1- Install Prometheus Operator

The first step in setting up your monitoring stack is to install the Prometheus Operator and the relevant CRDs in your cluster. A complete K8s monitoring stack also needs Grafana, node-exporter, and kube-state-metrics alongside Prometheus. Fortunately, all the required components are included as dependency charts in the Prometheus Operator Helm Chart.

You can install Prometheus Operator using Helm like this:

    $ helm repo add prometheus-community https://prometheus-community.github.io/helm-charts
    "prometheus-community" has been added to your repositories

    $ helm repo update
    Hang tight while we grab the latest from your chart repositories...
    ...Successfully got an update from the "prometheus-community" chart repository
    Update Complete. ⎈Happy Helming!⎈

    $ helm install prom-operator-01 prometheus-community/kube-prometheus-stack
    NAME: prom-operator-01
    LAST DEPLOYED: Thu May 6 15:14:30 2021
    NAMESPACE: default
    STATUS: deployed
    REVISION: 1
    NOTES:
    kube-prometheus-stack has been installed. Check its status by running:
      kubectl --namespace default get pods -l "release=prom-operator-01"

    Visit https://github.com/prometheus-operator/kube-prometheus for instructions on how to create & configure Alertmanager and Prometheus instances using the Operator.

    $ kubectl --namespace default get pods
    NAME                                                     READY   STATUS    RESTARTS   AGE
    alertmanager-prom-operator-01-kube-prom-alertmanager-0   2/2     Running   0          10m
    prom-operator-01-grafana-6c68475d4d-cjxxk                2/2     Running   0          10m
    prom-operator-01-kube-prom-operator-96f558d77-v4cwf      1/1     Running   0          10m
    prom-operator-01-kube-state-metrics-6749546fc7-998kx     1/1     Running   0          10m
    prom-operator-01-prometheus-node-exporter-btvs5          1/1     Running   0          10m
    prom-operator-01-prometheus-node-exporter-btvzh          1/1     Running   0          10m
    prom-operator-01-prometheus-node-exporter-nfb2n          1/1     Running   0          10m
    prometheus-prom-operator-01-kube-prom-prometheus-0       2/2     Running   1          10m

    $ kubectl --namespace default get svc
    NAME                                        TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)                      AGE
    alertmanager-operated                       ClusterIP   None           <none>        9093/TCP,9094/TCP,9094/UDP   10m
    kubernetes                                  ClusterIP   10.7.240.1     <none>        443/TCP                      3h51m
    prom-operator-01-grafana                    ClusterIP   10.7.241.87    <none>        80/TCP                       10m
    prom-operator-01-kube-prom-alertmanager     ClusterIP   10.7.254.118   <none>        9093/TCP                     10m
    prom-operator-01-kube-prom-operator         ClusterIP   10.7.244.210   <none>        443/TCP                      10m
    prom-operator-01-kube-prom-prometheus       ClusterIP   10.7.246.195   <none>        9090/TCP                     10m
    prom-operator-01-kube-state-metrics         ClusterIP   10.7.252.122   <none>        8080/TCP                     10m
    prom-operator-01-prometheus-node-exporter   ClusterIP   10.7.251.10    <none>        9100/TCP                     10m
    prometheus-operated                         ClusterIP   None           <none>        9090/TCP                     10m
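The install above uses the chart defaults. If you want to customize the stack, a values file is the usual route. The sketch below shows a few overrides that the kube-prometheus-stack chart has offered; the file name values.yaml and the specific settings are assumptions for illustration, so check the key names against the values of the chart version you install.

```yaml
# values.yaml (hypothetical file name): example overrides for kube-prometheus-stack.
# Verify every key against the chart version you are installing.
grafana:
  adminPassword: change-me        # placeholder; replace with your own secret value

prometheus:
  prometheusSpec:
    retention: 15d                # how long the chart's Prometheus keeps metric data
    # When false, the chart's Prometheus selects ServiceMonitors regardless of
    # the Helm release label, instead of only those created by this release.
    serviceMonitorSelectorNilUsesHelmValues: false
```

You would pass the file at install time by adding -f values.yaml to the helm install command shown above.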

Step 2- Configure port forwarding for Grafana

Next, configure port forwarding for the Grafana service:

    $ kubectl port-forward svc/prom-operator-01-grafana 8080:80
    Forwarding from 127.0.0.1:8080 -> 3000
    Forwarding from [::1]:8080 -> 3000
    Handling connection for 8080
    Handling connection for 8080
    Handling connection for 8080
    ...

At this point, you can navigate to localhost:8080 and you should be able to view the Grafana UI.

As an alternative to Grafana, you may also use a hosted Monitoring as a Service (MaaS) offering that is compatible with Prometheus and natively supports PromQL. The advantages of using such a service are:

- Support for various UI widgets to customize dashboards

- A single platform combining visualization, alerting, and automation

- A long list of supported integrations

- Sophisticated alerting based on machine learning

- Avoid the need to manage and scale your own metrics database

- Premium support services

Step 3- Create a ServiceMonitor resource

Now we can begin configuring service monitoring for k8s-app: my-app. To monitor this service, first create a ServiceMonitor resource using the manifest below:

    apiVersion: monitoring.coreos.com/v1
    kind: ServiceMonitor
    metadata:
      name: my-app
      labels:
        env: staging
    spec:
      selector:
        matchLabels:
          k8s-app: my-app
      endpoints:
      - port: api

We also defined the env: staging label for the ServiceMonitor. This label is how the Prometheus resource created in the next step finds the ServiceMonitor. The ServiceMonitor scrapes the port named api on the endpoints of the services that match the label selector above.
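For the ServiceMonitor to find anything, a Service carrying the k8s-app: my-app label and exposing a port named api must exist. The article does not show that Service, so the sketch below is an assumption of what it might look like; the Service name, the pod selector, and the port number 8080 are all hypothetical.

```yaml
# Hypothetical Service that the ServiceMonitor above would select.
apiVersion: v1
kind: Service
metadata:
  name: my-app               # assumed name
  labels:
    k8s-app: my-app          # matched by the ServiceMonitor's spec.selector.matchLabels
spec:
  selector:
    k8s-app: my-app          # assumed label on the application pods
  ports:
  - name: api                # referenced by "port: api" in the ServiceMonitor
    port: 8080               # assumed port number
    targetPort: 8080
```

Because the ServiceMonitor manifest sets no namespaceSelector, it only looks for Services in its own namespace, so this Service would need to live alongside it.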

Step 4- Deploy a Prometheus instance

Next we need to deploy our Prometheus instance. Without the Prometheus Operator, this step can be cumbersome and require significant manual configuration of manifests. However, with Prometheus Operator, you can use the simple manifest below:

    apiVersion: monitoring.coreos.com/v1
    kind: Prometheus
    metadata:
      name: prometheus
    spec:
      serviceAccountName: prometheus
      serviceMonitorSelector:
        matchLabels:
          env: staging
      resources:
        requests:
          memory: 200Mi
          cpu: 2

It is important to note that the serviceMonitorSelector above maps ServiceMonitors to this Prometheus instance. The label on the ServiceMonitor must match the label specified in the Prometheus manifest. In our case it is env: staging, so the label matches in both places. PodMonitors, Probes, and PrometheusRules follow the same convention.
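The Prometheus manifest in this step references a service account named prometheus, which has to exist and be allowed to discover scrape targets. The article does not show that setup, so the following is a sketch modeled on common RBAC for Prometheus; the default namespace and the exact rule list are assumptions you should adapt to your cluster's policies.

```yaml
# Sketch of a service account and read-only RBAC for the Prometheus instance.
apiVersion: v1
kind: ServiceAccount
metadata:
  name: prometheus
  namespace: default         # assumed namespace
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: prometheus
rules:
- apiGroups: [""]
  resources: ["nodes", "nodes/metrics", "services", "endpoints", "pods"]
  verbs: ["get", "list", "watch"]   # needed for target discovery
- apiGroups: [""]
  resources: ["configmaps"]
  verbs: ["get"]
- nonResourceURLs: ["/metrics"]
  verbs: ["get"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: prometheus
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: prometheus
subjects:
- kind: ServiceAccount
  name: prometheus
  namespace: default         # must match the service account's namespace
```

A ClusterRole is used here so Prometheus can discover targets in any namespace; a namespaced Role and RoleBinding would be a tighter alternative if you only monitor one namespace.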

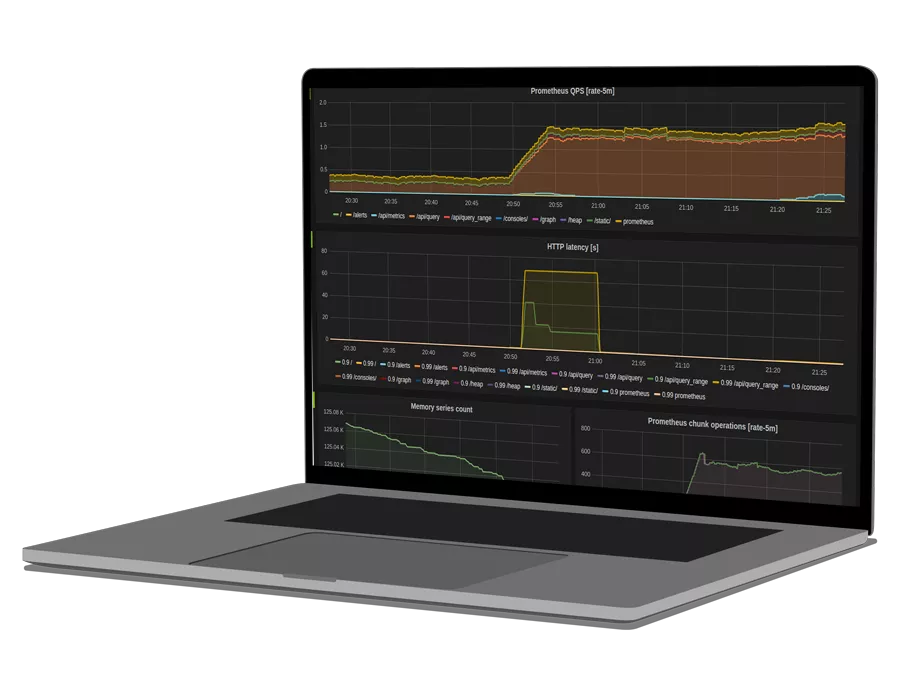

Once the Prometheus pod is running, metrics for the monitored endpoint will start showing up. These metrics can be viewed using the Prometheus UI or Grafana.

Additionally, you can create Probes and Alerting rules to customize your Prometheus and Alertmanager configuration.
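As a sketch of what an alerting rule could look like, here is a minimal PrometheusRule. The rule name, the alert name MyAppDown, the 5m duration, and the job="my-app" matcher are assumptions; the job label typically defaults to the name of the scraped Service, which is assumed here to be my-app. The Prometheus resource would also need a spec.ruleSelector matching the env: staging label, because a Prometheus resource without a ruleSelector selects no rules.

```yaml
# Minimal PrometheusRule sketch; names, labels, and thresholds are assumed.
apiVersion: monitoring.coreos.com/v1
kind: PrometheusRule
metadata:
  name: my-app-rules         # hypothetical name
  labels:
    env: staging             # must match spec.ruleSelector on the Prometheus resource
spec:
  groups:
  - name: my-app.rules
    rules:
    - alert: MyAppDown
      expr: up{job="my-app"} == 0   # fires when a scraped target reports as down
      for: 5m                       # condition must hold this long before firing
      labels:
        severity: warning
      annotations:
        summary: A my-app target has been down for 5 minutes
```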

Important notes

- Prometheus Operator watches namespaces for the Prometheus/Alertmanager CRs. In most scenarios it watches all namespaces, but you can configure it to watch a particular namespace instead by setting the --namespaces=<desired-namespace-to-watch> flag on the Prometheus Operator.

- spec.selector.matchLabels MUST match your app’s label (k8s-app: my-app in our example) for the ServiceMonitor to find the corresponding endpoints during deployment.

- You can access the Prometheus UI by port-forwarding to the Prometheus container or creating a service on top of it. In a production scenario you should not expose Prometheus, since it should only act as a Grafana data source. However, if you need to expose it, it is recommended to use an Ingress.

- Make sure Prometheus instances are configured to store metric data in persistent volumes so that the data is preserved between restarts.

- Prometheus Operator also supports orchestrating Thanos for Prometheus clustering. More on that in the next blog 🙂
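On the persistent storage note above: the Prometheus resource accepts a storage section with a volume claim template. The sketch below extends the Step 4 manifest; the storage class name standard and the 10Gi size are assumptions that depend on your cluster.

```yaml
# Prometheus resource with persistent storage; storage class and size are assumed.
apiVersion: monitoring.coreos.com/v1
kind: Prometheus
metadata:
  name: prometheus
spec:
  serviceAccountName: prometheus
  serviceMonitorSelector:
    matchLabels:
      env: staging
  storage:
    volumeClaimTemplate:
      spec:
        storageClassName: standard      # assumed; use a class available in your cluster
        accessModes: ["ReadWriteOnce"]
        resources:
          requests:
            storage: 10Gi               # assumed size; tune to your retention needs
```

With a claim template in place, each Prometheus replica gets its own PersistentVolumeClaim, so data survives pod restarts and rescheduling.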


Final thoughts

Prometheus Operator streamlines Prometheus configuration and deployment, allowing you to quickly provision a production-grade monitoring stack. The Prometheus Operator Helm Chart can be easily managed using any CI/CD tool, which makes automation and configuration management simple. For a deeper dive on Prometheus Operator, check out the official getting started guide. To reduce your administrative burden, consider a Monitoring as a Service provider that natively supports Prometheus and PromQL.

To learn more about Thanos, check out its official documentation.
