Containerization is hot right now. In 2020, 86% of technology leaders reported prioritizing containerization projects for their applications, and by 2023, 70% of global businesses were expected to be running at least two containerized applications in production. With adoption growing this quickly, more and more organizations are debating whether to embrace containers or stay with virtual machines. In this article, we’ll review the basics of both solutions and compare them directly.

The simplest way to think of a container in relation to a virtual machine (VM) is that a VM virtualizes a physical server, whereas a container virtualizes an operating system (OS). The OS that containers virtualize may run on either a physical server or a virtual machine. But before we dig deeper into explaining each, let’s quickly review the key benefits of both containers and virtual machines.

| Container Benefits | Virtual Machine Benefits |
|---|---|
| Small memory footprint since the OS instance is shared across containers | You can install different OS types (Linux, Windows) in VMs hosted on the same server |
| Launch a new instance in seconds | Launch a new instance in minutes |
| Ideal for maintaining a fixed preset configuration and replacing it as quickly as needs change | Ideal for changing its configuration over time as you would when using a physical server |
| Ideal for a lifespan (from launch to termination) ranging from minutes to days | Ideal for a lifespan ranging from days to years |
| Ideally suited to host microservices | Ideally suited to support client-server applications or to host containers |

What is a Virtual Machine?

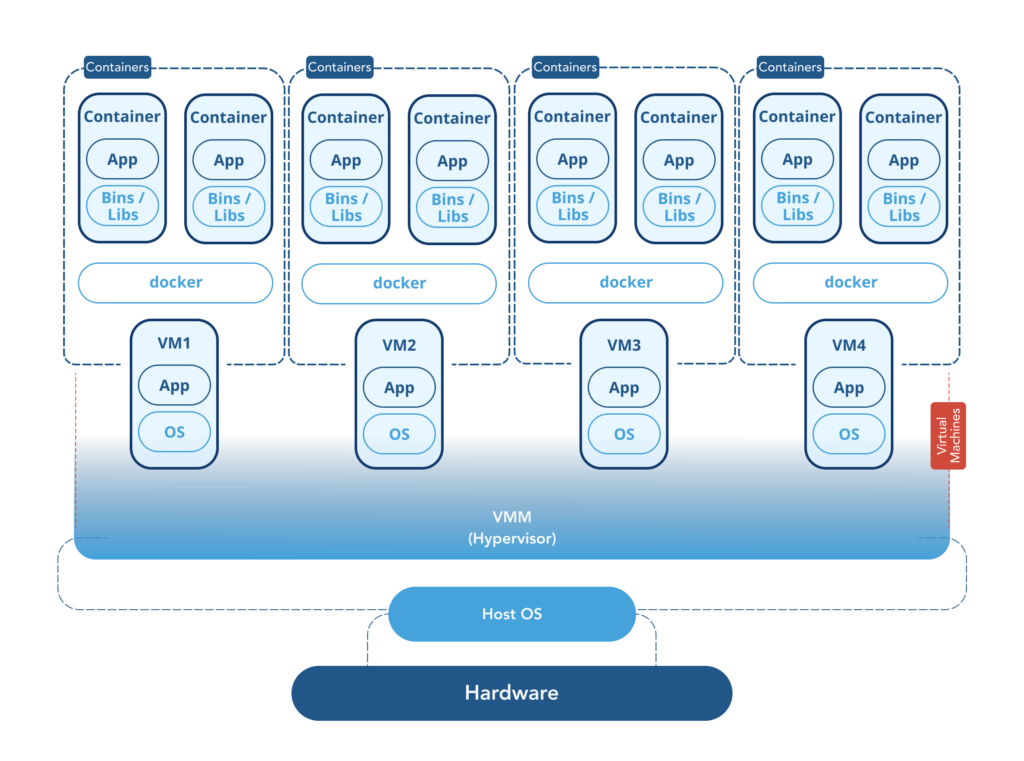

A virtual machine is a software entity that functions the same way a physical computer system would. To run VMs, your physical machines need virtualization software known as a hypervisor (or Virtual Machine Monitor) to host the VMs and handle VM management. VMware vSphere is the most popular commercially licensed hypervisor and Microsoft Hyper-V is a common choice in Windows environments, while free options such as Xen (an early open source hypervisor project) and KVM (which is architected a bit differently) are commonly used to virtualize Linux servers. The hypervisor emulates the server’s physical hardware so that the installed operating system (Linux, Windows, or macOS) can’t tell the difference between a VM and an underlying physical server. As a result, each virtual machine has its own dedicated OS and kernel. Because it requires a dedicated OS instance, a VM’s memory footprint is almost as large as that of a small physical server.
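As a rough illustration of the hardware side, hypervisors such as KVM depend on CPU virtualization extensions, which Linux on x86 reports in `/proc/cpuinfo` as the `vmx` (Intel VT-x) or `svm` (AMD-V) flag. The Python sketch below checks for those flags. The function names are hypothetical, the parser only understands the x86 `flags` line, and a positive result does not by itself guarantee that KVM can run (the KVM kernel modules must also be loaded, for example).

```python
from pathlib import Path

# Flags Linux reports in /proc/cpuinfo on x86 for hardware virtualization:
# "vmx" = Intel VT-x, "svm" = AMD-V.
VIRT_FLAGS = {"vmx", "svm"}


def hardware_virt_flags(cpuinfo_text: str) -> set[str]:
    """Return the virtualization flags listed on any 'flags' line of cpuinfo text."""
    found: set[str] = set()
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            found |= VIRT_FLAGS.intersection(value.split())
    return found


def cpu_has_virt_extensions(cpuinfo_path: str = "/proc/cpuinfo") -> bool:
    """True if the CPU advertises VT-x or AMD-V (Linux on x86 only)."""
    return bool(hardware_virt_flags(Path(cpuinfo_path).read_text()))
```

On an x86 Linux host, `cpu_has_virt_extensions()` returns `True` when either flag is present. Inside a VM, the flags usually appear only if the outer hypervisor exposes nested virtualization.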

Virtual machines help organizations save on IT infrastructure costs by running many operating systems and applications on a small number of physical servers. VMs also allow you to configure an ideal environment for the changing needs of your applications over time.

What is Containerization?

Containerization aims to provide a consistent and portable way to deliver software applications by packaging the application software and its dependencies in “boxes” that can be rapidly copied and multiplied to scale up or down as workload demands change.
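To make scaling up or down with demand concrete, here is a Python sketch of one common proportional rule, the formula documented for the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric). The function name and the min/max bounds are illustrative assumptions, and real autoscalers add details such as a tolerance band and stabilization windows that are omitted here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Proportional scaling rule: grow or shrink the number of identical containers with load."""
    if current_replicas < 1 or target_metric <= 0:
        raise ValueError("need at least one running replica and a positive target")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the configured bounds so a spike or an idle period can't go to extremes.
    return max(min_replicas, min(max_replicas, raw))
```

For example, 4 containers averaging 90% CPU against a 60% target give `desired_replicas(4, 90, 60) == 6`, while the same 4 containers at 30% scale down to 2. Because every container is an identical copy of the same image, adding or removing copies is all the scaling step has to do.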

Containers work because they package all of your application’s dependencies and configuration (such as system files and software libraries) to ensure a predictable and consistent runtime, regardless of the environment they are deployed in, provided a Container Runtime Environment (the software that provides OS-level virtualization) such as Docker is installed on the operating system. Docker remains by far the most popular container runtime engine, with containerd and CRI-O as the runners-up.

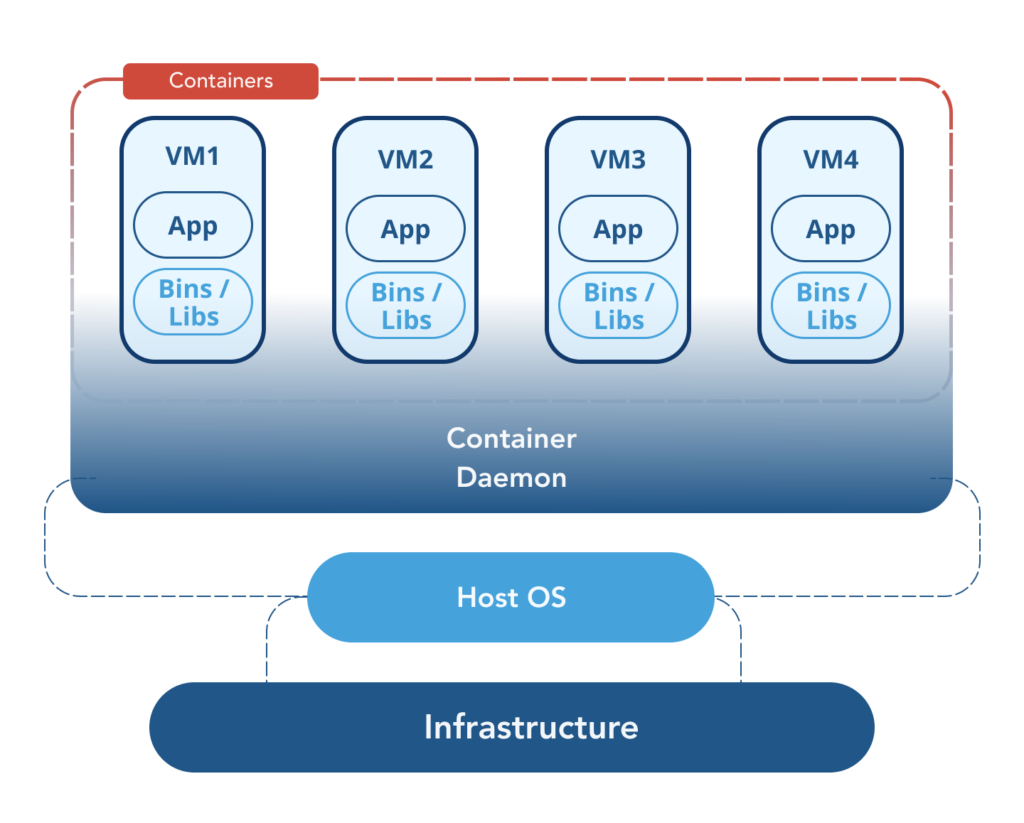

For illustration, see the following depiction of a container:

Host OS & The Kernel

The Hardware component represents a physical or virtual machine with an Operating System (OS). The OS has what is known as a kernel, which is a program that acts as a governing layer between all of the programs that run on the host machine and its physical components. The kernel plays a critical role in containerization, and because of this, you must deploy containers that are compatible with the underlying OS kernel (meaning containers are OS-specific).

Namespaces & Control Groups

The Linux kernel has two distinct features known as namespaces and control groups:

- Namespaces: isolate the resources (filesystem mounts, networking, hostnames, users, process IDs, etc.) that a particular process can see on a machine

- Control groups (cgroups): limit the amount of resources (CPU, memory, disk I/O, etc.) that a process can use
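Namespaces are visible from user space: on Linux, each process has entries under `/proc/<pid>/ns/` whose link targets (for example `uts:[4026531838]`) identify the namespace it belongs to. Two processes in the same namespace show the same identifier, while a containerized process shows identifiers that differ from the host’s for the namespaces its runtime created. The Python sketch below reads those links; the helper names are hypothetical and it assumes a Linux `/proc` filesystem.

```python
import os


def namespace_ids(pid: str = "self") -> dict[str, str]:
    """Map each namespace type (net, pid, uts, ...) to its identifier for one process."""
    ns_dir = f"/proc/{pid}/ns"
    ids: dict[str, str] = {}
    for name in sorted(os.listdir(ns_dir)):
        try:
            # Each entry is a symlink whose target looks like 'net:[4026531840]'.
            ids[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            # Some entries can be unreadable without extra privileges.
            continue
    return ids


def shared_namespaces(a: dict[str, str], b: dict[str, str]) -> list[str]:
    """Namespace types in which two processes see the same resources."""
    return sorted(k for k in a.keys() & b.keys() if a[k] == b[k])
```

Comparing the output of `namespace_ids()` on the host with the same call made inside a container typically shows different `mnt`, `pid`, `net`, and `uts` identifiers. Inspecting another user’s process by PID may require root.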

These two features combined allow you to isolate a single process (representing a container) and limit the amount of computing resources it can consume. Effectively, this combination is what we call containerization.
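On the control-group side, cgroup v2 (mounted at `/sys/fs/cgroup` on many current distributions) exposes limits as plain files. `cpu.max` holds a quota and a period in microseconds, `memory.max` holds a byte limit, and writing a PID to `cgroup.procs` moves that process into the group. The Python sketch below plans and applies such limits for a hypothetical group name. Applying them requires root, and the `cpu` and `memory` controllers must be enabled in the parent group’s `cgroup.subtree_control`. Container runtimes typically perform this step for you; the sketch only shows the mechanism.

```python
from pathlib import Path

CGROUP_ROOT = Path("/sys/fs/cgroup")  # usual cgroup v2 mount point (assumption)


def plan_limits(name: str, cpus: float, memory_bytes: int,
                period_us: int = 100_000) -> dict[Path, str]:
    """Return cgroup v2 interface files and the values that cap CPU and memory."""
    if cpus <= 0 or memory_bytes <= 0:
        raise ValueError("limits must be positive")
    group = CGROUP_ROOT / name
    quota_us = round(cpus * period_us)  # e.g. 0.5 CPU -> 50000 us per 100000 us period
    return {
        group / "cpu.max": f"{quota_us} {period_us}",
        group / "memory.max": str(memory_bytes),
    }


def apply_limits(name: str, pid: int, limits: dict[Path, str]) -> None:
    """Create the group, write the limits, then move the process into it (requires root)."""
    group = CGROUP_ROOT / name
    group.mkdir(exist_ok=True)
    for path, value in limits.items():
        path.write_text(value)
    (group / "cgroup.procs").write_text(str(pid))
```

For example, `apply_limits("demo", pid, plan_limits("demo", 0.5, 256 * 1024 * 1024))` would cap that process at half a CPU and 256 MiB of memory. Combined with namespaces, that is the isolation-plus-limits pairing described above.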

Although these features are specific to Linux, you can still run Linux containers on a Mac or Windows desktop machine. To do so, install a Container Runtime Environment such as Docker (or use a native hypervisor such as Hyper-V on Windows or HyperKit on macOS) to run a Linux virtual machine that hosts the containers, while your desktop continues to function as before. The Linux VM provides the kernel that constrains the containers’ access to the hardware resources on your machine.
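Because containers share the kernel of whatever machine runs them, a process can check which kernel it sees. The Python sketch below uses the standard `platform` module; `needs_linux_vm` is a hypothetical helper encoding the rule above: Linux containers need a Linux kernel, so a macOS (`Darwin`) or Windows host needs a Linux VM to supply one.

```python
import platform


def kernel_info() -> tuple[str, str]:
    """Return (kernel name, kernel release) as seen by the current process."""
    uname = platform.uname()
    return uname.system, uname.release


def needs_linux_vm(host_system: str) -> bool:
    """Linux containers need a Linux kernel; any other host kernel needs a Linux VM."""
    return host_system != "Linux"


if __name__ == "__main__":
    system, release = kernel_info()
    print(f"kernel: {system} {release}; Linux VM needed: {needs_linux_vm(system)}")
```

Run inside a container on a Linux host, `kernel_info()` reports the host’s kernel release (the same value `uname -r` prints on the host), since there is no separate guest kernel. Run inside a container on macOS or Windows, it reports the kernel of the Linux VM.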

Containers vs VMs

Containers can be much lighter than VMs because they don’t need their own dedicated OS to run. As a result, you can run many containers on a single server while consuming fewer of your host machine’s resources. This setup also translates into less computational overhead and faster startup times.

Here is a side-by-side visual comparison of their architectural differences:

Let’s summarize what we covered in this article in a comparison table:

| Containers | VMs |
|---|---|
| OS-level virtualization | Hardware-level virtualization |
| Share a single host OS | Dedicated OS and kernel |
| Lightweight (computing footprint) | Relatively heavyweight |
| Quick startup time (seconds) | Slower startup time (minutes) |
| Process-level isolation | Fully isolated system |
| Much lower running costs | Enterprise virtualization technology is relatively costly |

Although VMs are heavier, slower, and more costly, they still play a critical role in application delivery: they are key to optimizing server hardware usage in a data center environment. In fact, the most popular AWS service is still Amazon EC2, which is essentially a virtual machine running on a hypervisor hosted on a physical AWS server. AWS customers often use EC2 instances to host a container runtime engine. Together, the two technologies are extremely powerful and complementary. When it comes to hosting applications architected as microservices (see our next article), however, containers are the answer.

You like our article?


Follow our monthly hybrid cloud digest on LinkedIn to receive more free educational content like this.