Today, Kubernetes is the go-to container orchestration solution. What began as an idea between Craig McLuckie, Joe Beda, and Brendan Burns in 2013 has quickly grown into one of the most popular open-source projects in the world. Even as the leading solution, Kubernetes continues to grow rapidly as more enterprises adopt it. And for the last two years, developers responding to the Stack Overflow Developer Survey have ranked Kubernetes among the most “loved” and “wanted” technologies.

This surge in popularity is directly tied to the rapid adoption of containers, such as Docker containers, in application delivery. Containers have been at the heart of modern microservices architectures, cloud-native software, and DevOps workflows.

However, orchestrating container deployments can be difficult, time-consuming, and complex to scale without the right tools. Kubernetes is purpose-built to address these challenges. K8s (a shorthand where the number 8 represents the eight letters between “K” and “s”) greatly reduces the complexity and manual work of container orchestration. As a result, major enterprises including Google, Spotify, Niantic, The New York Times, Asana, and China Unicom have used Kubernetes to streamline and scale up their container deployments.

In this article, we’ll explore the history of Kubernetes and the main reasons it has become the most popular container orchestration engine available today.

The History of Kubernetes

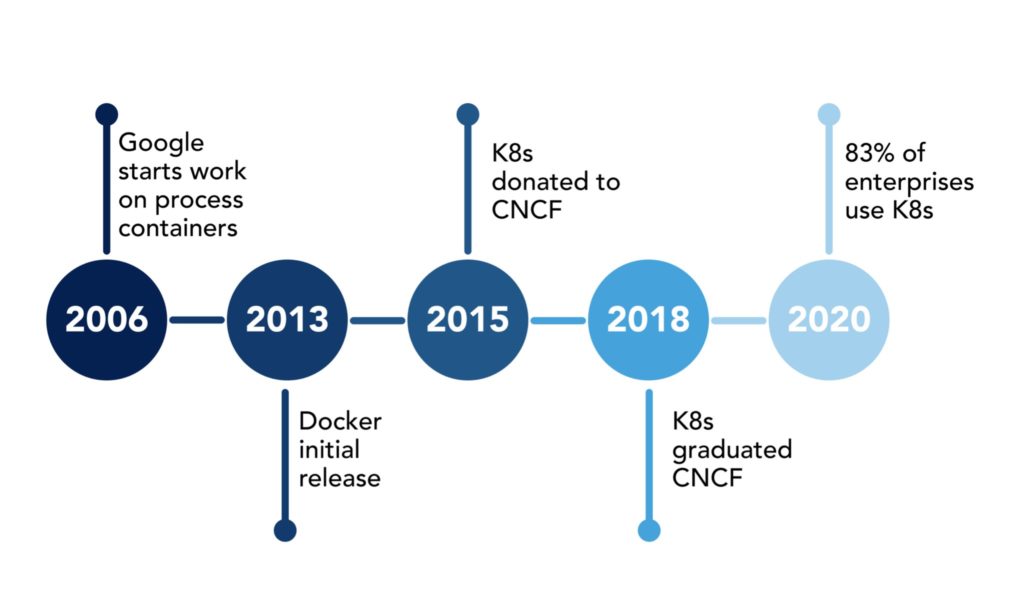

Kubernetes has its roots in Google’s internal Borg system, introduced between 2003 and 2004. Later, in 2013, Google introduced another project known as Omega, a flexible, scalable scheduler for large compute clusters. In that same year, McLuckie, Beda, and Burns set out to develop a “minimally viable orchestrator.” The essential features they wanted the orchestrator to have included:

- Replication: to deploy multiple instances of an application

- Load balancing and service discovery: to route traffic to these replicated containers

- Basic health checking and repair: to ensure a self-healing system

- Scheduling: to group many machines into a single pool and distribute work to them

The following year, Kubernetes was released. Today, Kubernetes has 1,800+ contributors, 500+ meetups worldwide, and 42,000+ users (many of whom have joined the public #kubernetes-dev channel on Slack). In 2020, 83% of the enterprises surveyed by the Cloud Native Computing Foundation (CNCF) were using Kubernetes.

4 Reasons for Kubernetes’ Popularity

#1 The Rise of Containers

Containers paved the way for Kubernetes. But what’s a container?

Containers are lightweight software components that package an entire application together with its dependencies (such as software libraries) and configuration (such as network settings) so that it runs as expected wherever it is deployed. This approach is becoming an increasingly popular alternative to virtual machines when it comes to application portability.

Containerization of applications brings a number of benefits, including the following:

- Portability: Containers come close to “write once, run anywhere”: the same container image can run on a public cloud, a physical server, or a virtual server, provided the host offers a compatible operating system kernel and CPU architecture.

- Efficiency: Containers use fewer resources than virtual machines (VMs) because they share the host’s operating system kernel, so more workloads fit on the same hardware.

- Agility: With containers, developers can easily plug applications into existing automated DevOps delivery pipelines or build new ones.

- Higher development throughput: Containers are ideal for hosting microservices owned by smaller, independent development teams. As a result, those teams can ship application updates faster.

- Faster start-up: Containers virtualize the operating system much as virtual machines virtualize the physical server hardware. Because a container does not boot its own guest operating system, it is lightweight and typically starts in seconds rather than minutes.

- Flexibility: Containers can run on virtual machines or bare metal hardware.

To take advantage of all these benefits at scale, software teams need orchestration tools that can deploy and manage hundreds or thousands of containers, and that need has driven the adoption of Kubernetes.

#2 The Rise of Cloud

The growth of cloud computing has also been a major contributing factor to Kubernetes’ widespread adoption. Cloud computing lets businesses use as many resources as they need, when they need them. The pay-per-use model, combined with the ability to rapidly provision and decommission resources, makes the cloud an ideal platform for hosting a Kubernetes cluster whose node count must change to accommodate shifting workloads.

Kubernetes is, by nature, a cloud-agnostic system that allows companies to provision the same containers across public and private clouds, a combination known as the hybrid cloud. Because the hybrid cloud model is a popular choice for enterprises, Kubernetes is a natural fit for their use case.

Benefits of Kubernetes for hybrid cloud models include:

- Consistency across on-premises and public cloud environments.

- Portability of workloads across platforms.

- Automation of provisioning processes spanning data center and cloud.

- Automated scaling of computing resources to maintain performance.
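As one concrete example of automated scaling, the Kubernetes documentation describes the Horizontal Pod Autoscaler (which scales the number of Pod replicas, not nodes) as computing desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The Python sketch below only evaluates that ratio. The function name is hypothetical, and the sketch deliberately omits the tolerance band, stabilization window, and minReplicas/maxReplicas bounds that the real controller also applies.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float) -> int:
    """Evaluate the Horizontal Pod Autoscaler's core scaling ratio.

    Hypothetical helper for illustration only: it ignores the HPA's
    tolerance, stabilization window, and min/max replica bounds.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    # Scale the replica count in proportion to how far the metric is from its target.
    return math.ceil(current_replicas * current_metric / target_metric)


# 4 replicas averaging 90% CPU against a 60% target: scale out.
print(desired_replicas(4, 90.0, 60.0))  # 6
# 4 replicas averaging 30% CPU against a 60% target: scale in.
print(desired_replicas(4, 30.0, 60.0))  # 2
```

Rounding up with ceil errs on the side of extra capacity, which suits the goal of maintaining performance.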

#3 The Declarative Model

Before the release of Kubernetes, many comparable tools automated deployment as a sequence of step-by-step procedures. Kubernetes took a different approach: users declare what the desired state of the system should be. Once that desired state is defined, Kubernetes continuously compares it with the actual state of the cluster and makes whatever changes are needed to reach and maintain it.

This declarative paradigm removes the burden of planning every step of the deployment and scaling processes, which makes it significantly more scalable in large environments.
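The declarative idea can be illustrated with a toy reconciliation loop. The Python sketch below is not Kubernetes code: ClusterState and reconcile are hypothetical names, and the model tracks nothing but a replica count per application. The caller states what should be running; the loop works out which actions close the gap, and a second pass does nothing because the system has already converged.

```python
from dataclasses import dataclass, field


@dataclass
class ClusterState:
    """Toy model (hypothetical): maps an application name to its running replicas."""
    replicas: dict[str, int] = field(default_factory=dict)


def reconcile(desired: dict[str, int], actual: ClusterState) -> list[str]:
    """One pass of a declarative control loop (illustrative sketch only).

    Compares declared replica counts with what is running and returns the
    actions needed to close the gap; the caller never lists the steps.
    """
    actions = []
    for app, want in sorted(desired.items()):
        have = actual.replicas.get(app, 0)
        if have < want:
            actions.append(f"start {want - have} x {app}")
        elif have > want:
            actions.append(f"stop {have - want} x {app}")
        actual.replicas[app] = want  # assume the actions succeed
    # Anything still running that is no longer declared gets removed.
    for app in sorted(set(actual.replicas) - set(desired)):
        actions.append(f"stop {actual.replicas.pop(app)} x {app}")
    return actions


state = ClusterState({"web": 2, "legacy-cron": 1})
print(reconcile({"web": 3, "api": 2}, state))
# ['start 2 x api', 'start 1 x web', 'stop 1 x legacy-cron']
print(reconcile({"web": 3, "api": 2}, state))
# [] because the cluster already matches the declared state
```

Because each pass only compares desired and actual state, the same loop handles first deployment, scaling, and recovery from drift without separate scripts for each.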

#4 Extensibility

Kubernetes is a highly extensible platform. Out of the box, it provides native resource types such as Pods, Deployments, ConfigMaps, Secrets, and Jobs, each of which serves a specific purpose and is key to running applications in the cluster. Beyond these, software developers can add their own resource types through Custom Resource Definitions (CRDs), which the Kubernetes API server then serves alongside the built-in ones.

Kubernetes also enables software teams to write custom Operators: processes running in a Kubernetes cluster that follow what is known as the controller pattern, a control loop that drives actual state toward desired state. An Operator automates the management of custom resources, and the applications they describe, by talking to the Kubernetes API. Because K8s is so open and extensible, it can meet the demands of a wide range of use cases, limiting the need for engineers to turn to other tools for container orchestration.
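To show what “automating the management of custom resources” can mean, here is a minimal Python sketch of an Operator’s reconcile step for a hypothetical Database custom resource. CustomResource, reconcile_database, and the spec/status fields are assumptions made for illustration; a real Operator would be triggered by watch events and would read and write these objects through the Kubernetes API server rather than mutating them in memory.

```python
from dataclasses import dataclass, field


@dataclass
class CustomResource:
    """Toy stand-in for an instance of a hypothetical 'Database' custom resource."""
    name: str
    spec: dict  # desired state, written by the user
    status: dict = field(default_factory=dict)  # observed state, written by the Operator


def reconcile_database(cr: CustomResource) -> list[str]:
    """Hypothetical Operator reconcile step that encodes operational knowledge."""
    steps = []
    want_version = cr.spec["version"]
    have_version = cr.status.get("version")
    if have_version is None:
        steps.append(f"provision {cr.name} at version {want_version}")
    elif have_version != want_version:
        # Domain knowledge a generic controller lacks: back up before upgrading.
        steps.append(f"back up {cr.name}")
        steps.append(f"upgrade {cr.name} from {have_version} to {want_version}")
    cr.status["version"] = want_version  # record the observed state
    return steps


db = CustomResource("orders-db", {"version": "15"})
print(reconcile_database(db))  # ['provision orders-db at version 15']
db.spec["version"] = "16"  # the user edits only the desired state
print(reconcile_database(db))  # ['back up orders-db', 'upgrade orders-db from 15 to 16']
print(reconcile_database(db))  # []
```

The user only edits the spec; the backup-then-upgrade sequence lives in the Operator, which is the kind of operational knowledge Operators are meant to capture.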

Conclusion

As the cloud-native space continues to grow, more businesses will look to cloud computing for elasticity. Going hand in hand with this elastic cloud model is the use of containers for easier portability and rapid delivery of application workloads.

While these paradigms have solved many problems, they have also introduced new administrative complexity at scale, which calls for the automated orchestration that K8s provides. Kubernetes has received more attention and adoption than any other infrastructure technology in recent memory. This level of adoption should last for years to come, since K8s is free, sophisticated, stable, and well ahead of competing technologies.

